Topic identification, topic modelling

IA161 Advanced NLP Course, Course Guarantee: Aleš Horák

Prepared by: Jirka Materna

State of the Art

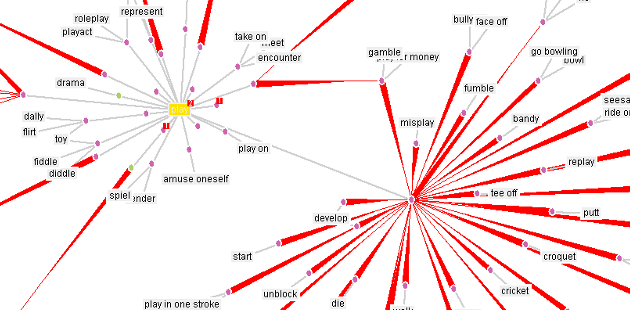

Topic modeling is a statistical approach for discovering abstract topics hidden in a collection of text documents. A document usually consists of several topics with different weights, and each topic can be described by the words most typical of it. The most frequently used topic modeling methods are Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation (LDA).
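The following minimal NumPy sketch makes the idea behind Latent Semantic Analysis (LSA) concrete. It applies a truncated singular value decomposition to a term-document matrix. Each of the k kept left singular vectors acts as a topic over terms, and each document receives k topic coordinates. Using NumPy rather than Gensim, and the made-up six-term, four-document matrix, are assumptions for illustration only. LDA instead models each document as a mixture of topics drawn from a Dirichlet prior.

```python
import numpy as np

# Made-up term-document count matrix X: rows are terms, columns are documents.
terms = ["goal", "match", "team", "vote", "party", "election"]
X = np.array([
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 2, 1],
    [0, 0, 1, 2],
    [0, 0, 2, 1],
], dtype=float)

k = 2  # number of latent topics to keep

# Thin SVD X = U diag(s) Vt, with singular values s sorted in descending order.
U, s, Vt = np.linalg.svd(X, full_matrices=False)

# Truncate to the k largest singular values: X ≈ U_k diag(s_k) Vt_k.
U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k, :]
X_k = U_k @ np.diag(s_k) @ Vt_k          # best rank-k approximation of X

# Columns of U_k are topics over terms; rows of doc_topics place documents in topic space.
doc_topics = (np.diag(s_k) @ Vt_k).T     # shape (n_documents, k)

for t in range(k):
    # The terms with the largest absolute weight describe the topic.
    top = np.argsort(-np.abs(U_k[:, t]))[:3]
    print(f"topic {t}:", [terms[i] for i in top])
```

Keeping the k largest singular values gives the best rank-k approximation of X in the Frobenius norm (the Eckart–Young theorem). The reconstruction error therefore equals the square root of the sum of the squared discarded singular values.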

References

- David M. Blei, Andrew Y. Ng, and Michael I. Jordan. Latent Dirichlet Allocation. Journal of Machine Learning Research, 3:993–1022, 2003.

- Yee W. Teh, Michael I. Jordan, Matthew J. Beal, and David M. Blei. Hierarchical Dirichlet Processes. Journal of the American Statistical Association, 101:1566–1581, 2006.

- S. T. Dumais, G. W. Furnas, T. K. Landauer, S. Deerwester, and R. Harshman. Using Latent Semantic Analysis to Improve Access to Textual Information. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’88, pages 281–285, New York, NY, USA, 1988. ACM. ISBN 0-201-14237-6.

Practical Session

In this session we will use Gensim to model latent topics of Wikipedia documents. We will focus on Latent Semantic Analysis and Latent Dirichlet Allocation models.

- Download and extract the corpus of Czech Wikipedia documents: wiki corpus.

- Train LSA and LDA models of the corpus for various numbers of topics using Gensim. You can use this template: models.py.

- For both LSA and LDA, select the best model, either by inspecting the resulting topics or, for LDA, by computing the perplexity of a held-out test set (lower perplexity is better).

- Select the 5 most important topics, each with its 10 most important words, give each topic a name, save them to a text file, and upload the file to the odevzdavarna.